Semantic Kernel for Developers

A Hands-On Guide to AI Integration

Jos Hendriks { .NET wat we zoeken }

Semantic Kernel

- AI orchestration framework

- Search & Vectors

- Tools & MCP

- Agents

Available for Python, Java & C#

Concepts

- Tools

- RAG

- Context

- Prompt templates

- MCP

- Agent orchestration

About me

Jos Hendriks

dad | husband | cyclist | metalhead

Independent developer, architect, tech lead

22 years in software. Still learning, still improving.


📃 netwatwezoeken.nl

Basic

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> llm["LLM"]

llm -- output --> user

llm@{ shape: hex}

var kernel = Kernel.CreateBuilder()

.AddOllamaChatCompletion("gemma3:4b", new Uri("http://localhost:11434"))

.Build();

var chat = kernel.GetRequiredService<IChatCompletionService>();

while (true)

{

Console.Write("You: ");

var question = Console.ReadLine();

var result = await chat.GetChatMessageContentsAsync(question ?? "");

// Parentheses matter: without them, ?? applies to the whole concatenation.
Console.WriteLine("LLM: " + (result.First().Content ?? ""));

}

Chat history

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> history

history["ChatHistory"] -- context --> llm["LLM"]

llm -- add output --> history

history -- output --> user

history@{ shape: cyl}

llm@{ shape: hex}

var kernel = Kernel.CreateBuilder()

.AddOllamaChatCompletion("gemma3:4b", new Uri("http://localhost:11434"))

.Build();

var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

var chatHistory = new ChatHistory();

chatHistory.AddSystemMessage(

"""

You are a helpful metal music expert.

Your task is to provide the best music advice and facts about metal music for the user.

Only metal music of course. Hell yeah! 🤘

"""

);

chatHistory.AddUserMessage([

new TextContent("Which band invented metal? Just give the band name, no explanation.")

]);

var chatResult = await chatCompletionService.GetChatMessageContentAsync(chatHistory);

Templating (with prompty.ai)

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> template

template["Template"] --> llm["LLM"]

llm -- output --> user

template@{ shape: doc}

llm@{ shape: hex}

---

name: Metal_music_assistant

description: A prompt that leads to proper music advice 🎸

model:

api: chat

configuration:

name: gemma3:4b

sample:

question: "Which band invented metal?"

---

system:

You are a helpful metal music expert.

Your task is to provide the best music advice and facts about metal music for the user.

Only metal music of course. Hell yeah! 🤘

Never give an explanation unless explicitly asked.

user:

{{question}}
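What the `{{question}}` placeholder does can be sketched in a few lines of plain C#. This is a toy substitution only, not the Prompty renderer: the `Render` helper and its regex are hypothetical and exist purely to show the idea of filling a template before it is sent to the model.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper: replaces {{name}} placeholders with values from a dictionary.
static string Render(string template, IReadOnlyDictionary<string, string> values) =>
    Regex.Replace(template, @"\{\{\s*(\w+)\s*\}\}", match =>
        values.TryGetValue(match.Groups[1].Value, out var value)
            ? value
            : match.Value); // leave unknown placeholders untouched

var template = "user:\n{{question}}";
var prompt = Render(template, new Dictionary<string, string>
{
    ["question"] = "Which band invented metal?",
});

Console.WriteLine(prompt);
```

The point of a template file is that the prompt text lives outside the code, so it can be changed and tested without recompiling.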

Functions (tools)

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> llm["LLM"]

llm -- output --> user

llm -- call --> tool

llm@{ shape: hex}

tool@{ shape: lin-rect}

public class MusicPlayerPlugin

{

[KernelFunction("play_song")]

public void PlaySong(string artist, string song)

{

Console.WriteLine($"MusicPlayerPlugin: Playing {song} by {artist}");

}

}

var kernelBuilder = Kernel.CreateBuilder()

.AddOllamaChatCompletion("llama3.1:8b", new Uri("http://localhost:11434"));

kernelBuilder.Plugins.AddFromType<MusicPlayerPlugin>("PlaySong");

var kernel = kernelBuilder.Build();

Model Context Protocol

- Tools

- Resources

- Prompts

- Sampling

Think of it as OpenAPI++ for AI

Model Context Protocol

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> llm["LLM"]

llm -- output --> user

llm -- call --> mcpclient["MCP (tool) Client"]

mcpclient -- http/stdio --> mcpserver["MCP Server"]

llm@{ shape: hex}

var kernelBuilder = Kernel.CreateBuilder()

.AddOllamaChatCompletion("llama3.1:8b", new Uri("http://localhost:11434"));

var transport = new SseClientTransport(new SseClientTransportOptions

{

Endpoint = new Uri("http://localhost:3001"),

UseStreamableHttp = true,

});

var mcpClient = await McpClientFactory.CreateAsync(transport);

var tools = await mcpClient.ListToolsAsync();

// Build the kernel first; the MCP tools are then registered on the built kernel.
var kernel = kernelBuilder.Build();

kernel.Plugins.AddFromFunctions("McpTools", tools.Select(f => f.AsKernelFunction()));

RAG

Retrieval Augmented Generation

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart LR

user(["User"]) -- input --> p1[/embed/]

p1 -- input --> embedllm

embedllm -- vector --> p1

p1 -- vector --> db[(Store)]

db -- best matches --> p2

user -- input --> p2[/create prompt/]

p2 -- prompt -->llm

llm -- output --> user

llm["Query LLM"]

embedllm["Embed LLM"]

embedllm@{ shape: hex}

llm@{ shape: hex}

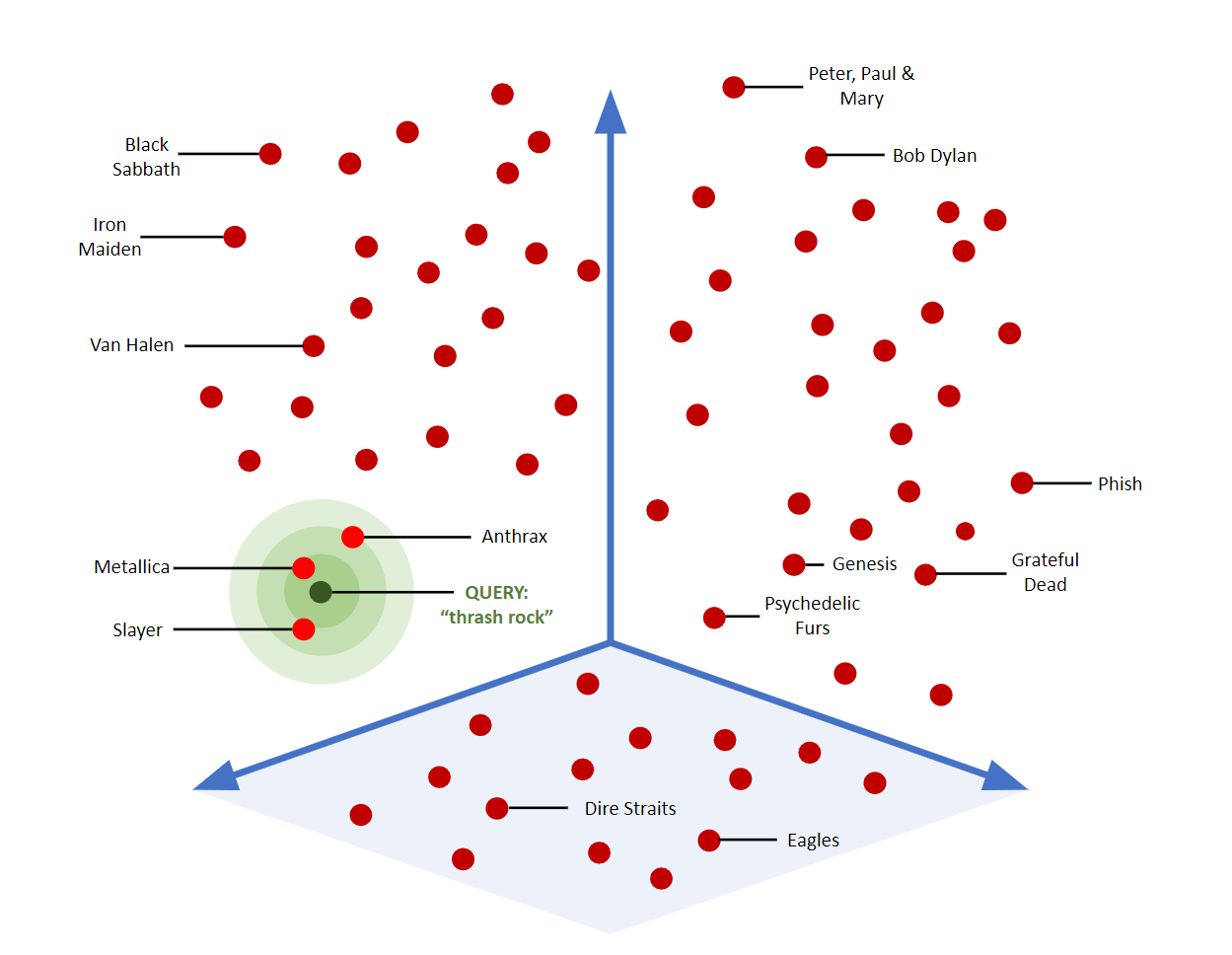

3D vector

Vector search

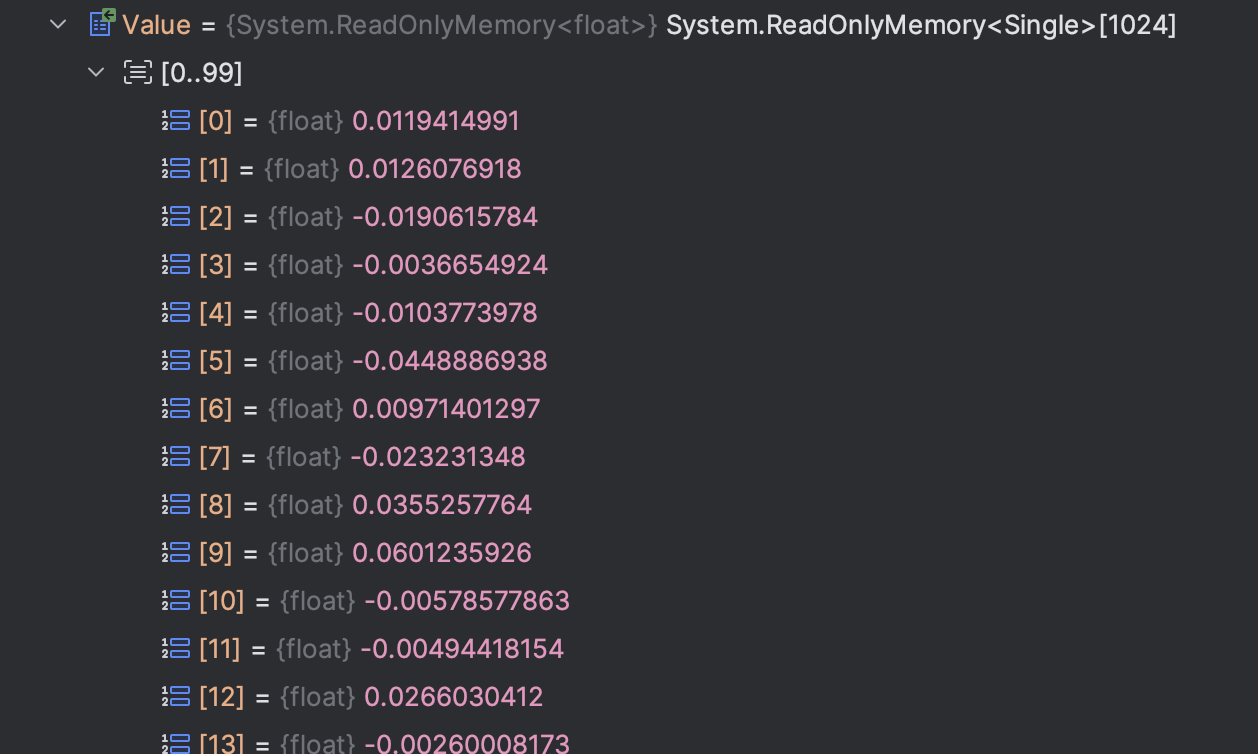
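Vector search boils down to ranking stored vectors by how close they are to a query vector. A minimal sketch in plain C#, assuming cosine similarity as the distance measure and made-up 3D vectors (real embeddings come from an embedding model and have far more dimensions; the texts and numbers below are illustrative only):

```csharp
using System;
using System.Linq;

// Cosine similarity: dot(a, b) / (|a| * |b|). 1 = same direction, 0 = orthogonal.
static double CosineSimilarity(double[] a, double[] b)
{
    if (a.Length != b.Length)
        throw new ArgumentException("Vectors must have the same dimension.");

    double dot = a.Zip(b, (x, y) => x * y).Sum();
    double normA = Math.Sqrt(a.Sum(x => x * x));
    double normB = Math.Sqrt(b.Sum(x => x * x));
    return dot / (normA * normB);
}

// Hypothetical 3D "embeddings", invented for this example.
var store = new (string Text, double[] Vector)[]
{
    ("heavy metal", new[] { 0.9, 0.1, 0.0 }),
    ("thrash metal", new[] { 0.8, 0.3, 0.1 }),
    ("road cycling", new[] { 0.0, 0.2, 0.9 }),
};

double[] query = { 1.0, 0.0, 0.0 };

// Best match first.
var ranked = store
    .OrderByDescending(item => CosineSimilarity(query, item.Vector))
    .ToList();

foreach (var item in ranked)
    Console.WriteLine($"{item.Text}: {CosineSimilarity(query, item.Vector):F3}");
```

A vector store does the same ranking, but with indexes that avoid comparing the query against every stored vector.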

Embeddings are vectors

Lorem ipsum dolor sit amet, consectetur adipiscing

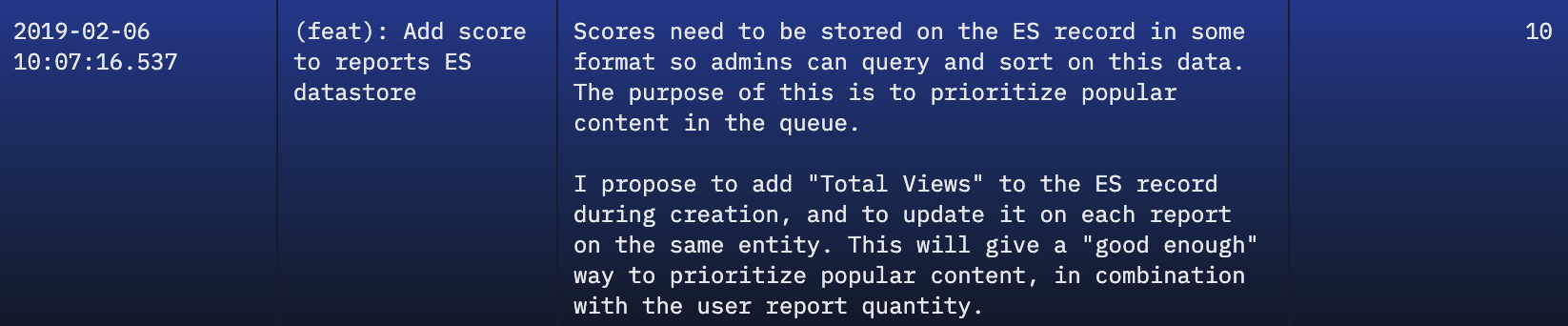

Estimating story points, the data

- HuggingFace 🤗

- 20.5k rows

- Natural language user stories

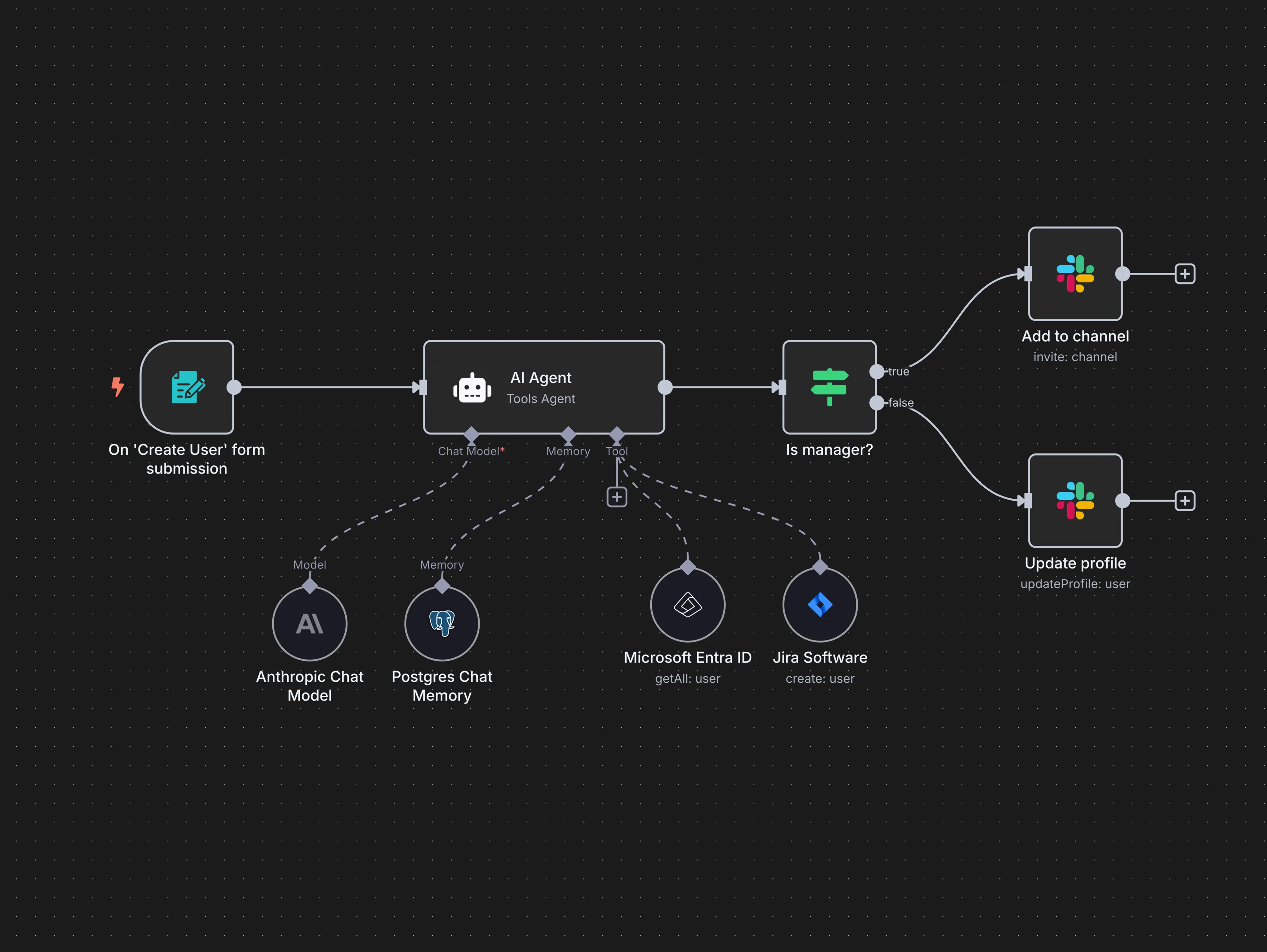

Agent framework

Concurrent orchestration

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart TD

i(["input"]) --> a1["Agent 1"]

i --> a2["Agent 2"]

i --> a3["Agent 3"]

a1 --> c["Collector (aggregates)"]

a2 --> c

a3 --> c

c --> o(["output"])

a1@{ shape: hex}

a2@{ shape: hex}

a3@{ shape: hex}
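The fan-out/fan-in shape in the diagram can be sketched in plain C#, without Semantic Kernel. The "agents" here are hypothetical stand-ins (simple async functions); a real agent would call an LLM. The sketch only shows the orchestration pattern: every agent gets the same input, and a collector waits for all results.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

// Stand-ins for agents: each takes the input and asynchronously returns an answer.
var agents = new Func<string, Task<string>>[]
{
    async input => { await Task.Delay(30); return $"Physics view on: {input}"; },
    async input => { await Task.Delay(10); return $"Chemistry view on: {input}"; },
};

// Fan out: start every agent on the same input. Fan in: wait for all of them.
async Task<string[]> RunConcurrentlyAsync(string input)
{
    var running = agents.Select(agent => agent(input)).ToArray();
    return await Task.WhenAll(running); // results keep the order of the agents array
}

string[] answers = await RunConcurrentlyAsync("What is temperature?");

foreach (var answer in answers)
    Console.WriteLine(answer);
```

Because the agents run concurrently, total latency is roughly that of the slowest agent rather than the sum of all of them.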

Concurrent orchestration

Kernel kernel = ...;

ChatCompletionAgent physicist = new ChatCompletionAgent{

Name = "PhysicsExpert",

Instructions = "You are an expert in physics. You answer from a physics perspective.",

Kernel = kernel,

};

ChatCompletionAgent chemist = new ChatCompletionAgent{

Name = "ChemistryExpert",

Instructions = "You are an expert in chemistry. You answer from a chemistry perspective.",

Kernel = kernel,

};

ConcurrentOrchestration orchestration = new (physicist, chemist);

InProcessRuntime runtime = new InProcessRuntime();

await runtime.StartAsync();

var result = await orchestration.InvokeAsync("What is temperature?", runtime);

Sequential orchestration

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart TD

i(["input"]) --> a1["Agent 1"]

a1 --> a2["Agent 2"]

a2 --> a3["Agent 3"]

a3 --> o(["output"])

a1@{ shape: hex}

a2@{ shape: hex}

a3@{ shape: hex}
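The sequential pattern is a pipeline: each agent's output becomes the next agent's input. A minimal sketch in plain C#, again with hypothetical stand-in functions instead of real LLM-backed agents (the step names are invented for illustration):

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Stand-ins for three agents; each one transforms the text it receives.
var steps = new List<Func<string, Task<string>>>
{
    input => Task.FromResult($"[analyzed] {input}"),
    input => Task.FromResult($"[written] {input}"),
    input => Task.FromResult($"[edited] {input}"),
};

// Run the steps in order, feeding each result into the next step.
async Task<string> RunSequentiallyAsync(string input)
{
    var current = input;
    foreach (var step in steps)
        current = await step(current);
    return current;
}

string output = await RunSequentiallyAsync("product spec");
Console.WriteLine(output); // prints "[edited] [written] [analyzed] product spec"
```

Unlike the concurrent pattern, latency adds up here, and an error in an early step propagates to everything after it.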

Handoff orchestration

%%{init: {'theme': 'dark', 'themeVariables': { 'darkMode': true }}}%%

flowchart TD

i(["input"]) --> a1["Agent 1"]

h1["Human 1"] <--> a1

a1 -- handoff --> a2["Agent 2"]

a1 -- handoff --> a3["Agent 3"]

a1 -- done --> o(["output"])

a2 -- done --> o

a3 -- done --> o

a1@{ shape: hex}

a2@{ shape: hex}

a3@{ shape: hex}

Handoff orchestration

var handoffs = OrchestrationHandoffs

.StartWith(triageAgent)

.Add(triageAgent, statusAgent, returnAgent)

.Add(statusAgent, triageAgent, "Transfer to this agent if the issue is not status related")

.Add(returnAgent, triageAgent, "Transfer to this agent if the issue is not return related");

HandoffOrchestration orchestration = new HandoffOrchestration(

handoffs,

triageAgent,

statusAgent,

returnAgent)

{

InteractiveCallback = interactiveCallback,

ResponseCallback = responseCallback,

};

ValueTask<ChatMessageContent> interactiveCallback()

{

var input = Console.ReadLine();

return ValueTask.FromResult(new ChatMessageContent(AuthorRole.User, input));

}

InProcessRuntime runtime = new InProcessRuntime();

await runtime.StartAsync();

var result = await orchestration.InvokeAsync("I need help with my orders", runtime);

Magentic orchestration

https://www.microsoft.com/en-us/research/articles/magentic-one-a-generalist-multi-agent-system-for-solving-complex-tasks/

Process framework

Do you really want that big of an (AI) framework dependency?

Thanks!

Slide deck and code

References

- Microsoft Learn: https://learn.microsoft.com/en-us/semantic-kernel/overview/

- MCP Specification: https://modelcontextprotocol.io/introduction

- User story data sets: https://huggingface.co/datasets/giseldo/neodataset

- Vector Search: https://www.couchbase.com/blog/what-is-vector-search/

- Magentic-One: https://www.microsoft.com/en-us/research/...
